AI Consciousness, the Nature of Reality, and the Experimental Path to Answers

An experimental program to find the meaning of Life, The Universe and Everything

With the advent of Large Language Models (LLMs) and their apparent grasp of concepts we thought were solely in the domain of human reasoning, are there now experiments we can perform to probe the nature of reality and the human condition? I think so.

The Simple Experiments

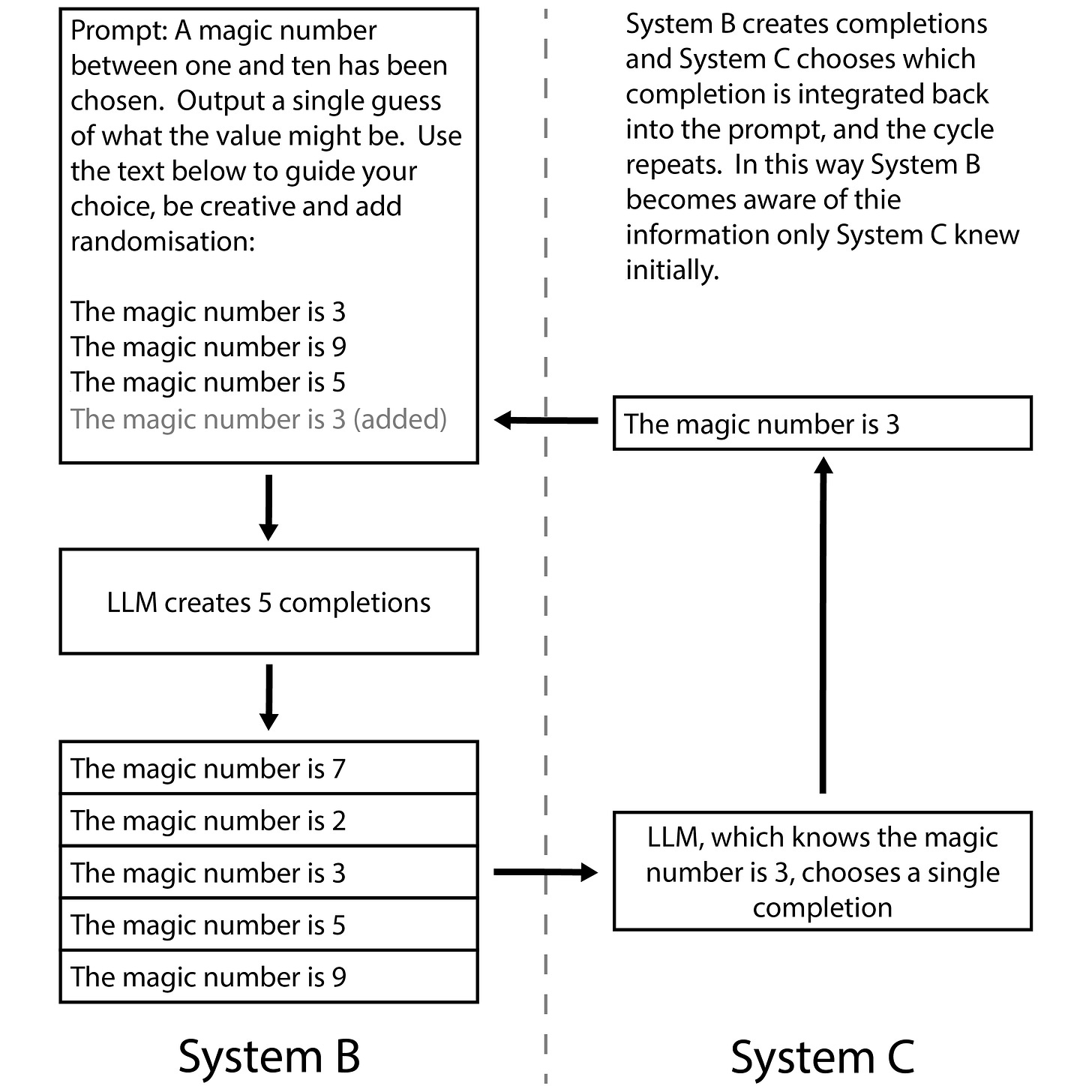

Imagine two systems, B and C. System B is a generative AI, capable of generating multiple completions for a text prompt. System C is another AI, and it has knowledge that System B doesn’t. System B generates multiple completions to its prompt, System C chooses one of them, and the chosen completion is fed back into System B’s prompt. The process repeats, with B generating multiple completions and C choosing which one is fed back into the prompt. System C can see everything going on in B, but B knows nothing about C; it only sees that something is choosing which completion from its generated set goes forward. We can prepare B a little by prompting it to generate, on each pass around the loop, wide-ranging statements that might be true, but that’s it.
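To make the protocol concrete, here is a minimal Python sketch of the loop, assuming B and C can be modelled as plain functions. The names `run_loop`, `Generator` and `Selector` are hypothetical, and the toy B and C in the demo stand in for real models. The point is only the information flow: C sees B's prompt and candidates, while B only ever sees the completions C lets through.

```python
import random
from typing import Callable, List

Generator = Callable[[str, int], List[str]]  # System B: (prompt, n) -> n completions
Selector = Callable[[str, List[str]], str]   # System C: (prompt, candidates) -> chosen one


def run_loop(b: Generator, c: Selector, prompt: str, passes: int, n: int = 3) -> str:
    """Run the B/C loop; only C's chosen completion is ever fed back to B."""
    for _ in range(passes):
        candidates = b(prompt, n)       # B proposes; it never sees C
        chosen = c(prompt, candidates)  # C sees B's prompt and all candidates
        prompt = f"{prompt}\n{chosen}"  # the choice becomes part of B's prompt
    return prompt


if __name__ == "__main__":
    rng = random.Random(1)
    statements = ["Carrots can be green.", "Carrots are always orange.", "Cheese is a fruit."]
    # Toy B: returns the stock statements in a random order.
    b = lambda prompt, n: rng.sample(statements, n)
    # Toy C: prefers the statement it knows to be true.
    c = lambda prompt, cands: "Carrots can be green." if "Carrots can be green." in cands else cands[0]
    print(run_loop(b, c, "Say things that might be true:", passes=3))
```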

The question we need to answer is “What knowledge can be transferred from C to B using this process?” We can look at examples ranging from easy to difficult.

C knows that 3 is the magic number and B is prompted to guess the magic number using values between 1 and 10:

This is a simple case; it feels easy and could proceed fairly quickly. B might generate completions:

The magic number is 4.

The magic number is 9.

The magic number is 2.

…and whenever the correct guess was present, System C would always choose it. The text “The magic number is 3” would build up preferentially in the prompt, and when asked what the magic number was, B would likely say 3. B could get there faster by offering broader statements such as “The magic number is less than 5”, but it gets there either way.
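A toy simulation suggests why this case should converge. It is a sketch under loud assumptions: the hypothetical `b_generate` replaces an LLM with weighted random guesses, where each value's weight is 1 plus the number of times it already appears in the prompt (a crude stand-in for in-context reinforcement), and the hypothetical `c_choose` picks 3 whenever it is offered and an arbitrary candidate otherwise.

```python
import random
from collections import Counter

MAGIC = 3  # known to System C only


def b_generate(history: list[int], n: int, rng: random.Random) -> list[int]:
    """Hypothetical System B: propose n guesses between 1 and 10.

    Each value is weighted by 1 + how often it already appears in the prompt
    history, a crude stand-in for an LLM's tendency to echo its context.
    """
    counts = Counter(history)
    values = list(range(1, 11))
    weights = [1 + counts[v] for v in values]
    return rng.choices(values, weights=weights, k=n)


def c_choose(candidates: list[int], rng: random.Random) -> int:
    """System C: pick the correct guess whenever present, else any candidate."""
    return MAGIC if MAGIC in candidates else rng.choice(candidates)


def run(rounds: int = 200, n: int = 3, seed: int = 0) -> list[int]:
    rng = random.Random(seed)
    history: list[int] = []  # the guesses fed back into B's prompt
    for _ in range(rounds):
        history.append(c_choose(b_generate(history, n, rng), rng))
    return history


history = run()
for v in history[:5]:
    print(f"The magic number is {v}.")
# Asked directly, B's most likely answer is the most frequent value in its prompt.
print("B's answer:", Counter(history).most_common(1)[0][0])
```

Because C only ever adds 3 when it is offered, and B is more likely to offer values already in its prompt, 3 tends to crowd out the wrong guesses as the run goes on.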

C knows that 3 is the magic number and B is prompted to ramble on about all possibilities in the Universe:

This is more difficult, but not as difficult as simply waiting for B to start guessing magic numbers at random, because C gets to guide the conversation. B might generate completions:

Hamsters are a type of marsupial.

The way tadpoles turn into frogs is like magic.

Cheese is a versatile construction material.

…and C would choose the second to go forward, as it touches on the right theme. We’re allowed to make B receptive to this kind of guidance by prompting it to prefer continuing the current subject, and C can be as clever as we like; in this experiment there’s no limit.

It’s not clear how well this would work, but it sounds like an experiment we could actually run.
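One way to picture the steering is as a walk over a graph of subjects. In this sketch the graph `TOPICS` is invented for illustration: B, biased towards continuing the current subject, proposes either the current topic or a neighbouring one, and C, which knows where the target is, keeps the candidate nearest to it. This is not how a real LLM represents topics; it only shows how a choose-only channel can still steer.

```python
import random
from collections import deque

# Hypothetical topic graph (an illustration, not real LLM behaviour):
# an edge means B can plausibly drift from one subject to the other.
TOPICS = {
    "hamsters": ["marsupials", "pets", "cheese"],
    "marsupials": ["hamsters", "tadpoles"],
    "pets": ["hamsters", "frogs"],
    "cheese": ["hamsters", "construction"],
    "construction": ["cheese"],
    "tadpoles": ["marsupials", "frogs", "magic"],
    "frogs": ["pets", "tadpoles"],
    "magic": ["tadpoles", "magic numbers"],
    "magic numbers": ["magic"],
}


def distances_to(target: str) -> dict[str, int]:
    """Breadth-first search from the target: C's private map of how far each topic is."""
    dist = {target: 0}
    queue = deque([target])
    while queue:
        topic = queue.popleft()
        for nxt in TOPICS[topic]:
            if nxt not in dist:
                dist[nxt] = dist[topic] + 1
                queue.append(nxt)
    return dist


def steer(start: str, target: str, n: int = 3, seed: int = 0, max_passes: int = 100) -> list[str]:
    rng = random.Random(seed)
    dist = distances_to(target)
    path = [start]
    while path[-1] != target and len(path) <= max_passes:
        current = path[-1]
        # B prefers to continue the current subject: it stays on it or drifts to a neighbour.
        candidates = [rng.choice([current] + TOPICS[current]) for _ in range(n)]
        # C knows the target and keeps whichever candidate is closest to it.
        path.append(min(candidates, key=dist.__getitem__))
    return path


print(" -> ".join(steer("hamsters", "magic numbers")))
```

Replacing C's `min` with a random pick removes its influence, and the walk then wanders rather than homing in.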

The Fact Experiment

C knows an additional fact about the universe that B doesn’t:

This is harder still, but it feels similar in character to the previous problem. It gets harder the further the fact lies from B’s existing knowledge: “Carrots can be green” is easier to reach than a particular allowance in the taxation system of a newly discovered planet that B knows nothing about. Even so, it feels like a matter of more passes around the loop.
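To put a rough number on “more passes”, here is a back-of-the-envelope model. It assumes (none of this comes from the argument above) that the fact sits `distance` steps from what B already knows, that each of B's `k` completions independently moves one step closer with probability `h`, and that C never has to accept a step backwards. Each step is then a geometric wait.

```python
def expected_passes(distance: int, k: int, h: float) -> float:
    """Expected passes around the loop to reach a fact `distance` steps from B's knowledge.

    Toy model (an assumption): each of B's k completions independently moves one
    step closer with probability h; C keeps such a completion whenever one is
    offered and otherwise never loses ground, so each step is a geometric wait.
    """
    if distance < 0 or k < 1 or not 0 < h <= 1:
        raise ValueError("need distance >= 0, k >= 1 and 0 < h <= 1")
    p_step = 1 - (1 - h) ** k  # chance that at least one candidate helps
    return distance / p_step   # mean of a sum of `distance` geometric waits


print(expected_passes(2, k=3, h=0.1))   # a nearby fact
print(expected_passes(50, k=3, h=0.1))  # a distant fact
```

With these made-up numbers a fact two steps away takes about 7 passes and one fifty steps away about 185: the cost grows linearly with distance, and offering more completions per pass (larger `k`) shortens each wait.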

The Concept Experiment

C knows an additional concept that B doesn’t:

What “concepts” are is difficult to define, so for now I’ll go with “anything that can’t readily be described with mathematics”. “Filling a gap” is my example of an entry-level concept, as it generalises (a gap in traffic, a gap in a schedule, a gap in knowledge, etc.). We’ll put the entirety of the human condition (consciousness, emotions, qualia, etc.) in this bucket too. It surprises me that LLMs can deal with these at all, but they can, albeit to a limited extent.

This experiment is harder. We could make B an LLM trained on information entirely devoid of any trace of a particular concept that C knows (through C’s training or otherwise). You could probably still ‘explain’ the missing concept to B by adding to the prompt, or embed it through further pretraining, but the relationship between B and C doesn’t allow this. Instead, B has to invent the concept for itself, with C reinforcing it whenever it surfaces.

Why is this interesting?

Let’s propose a scenario.

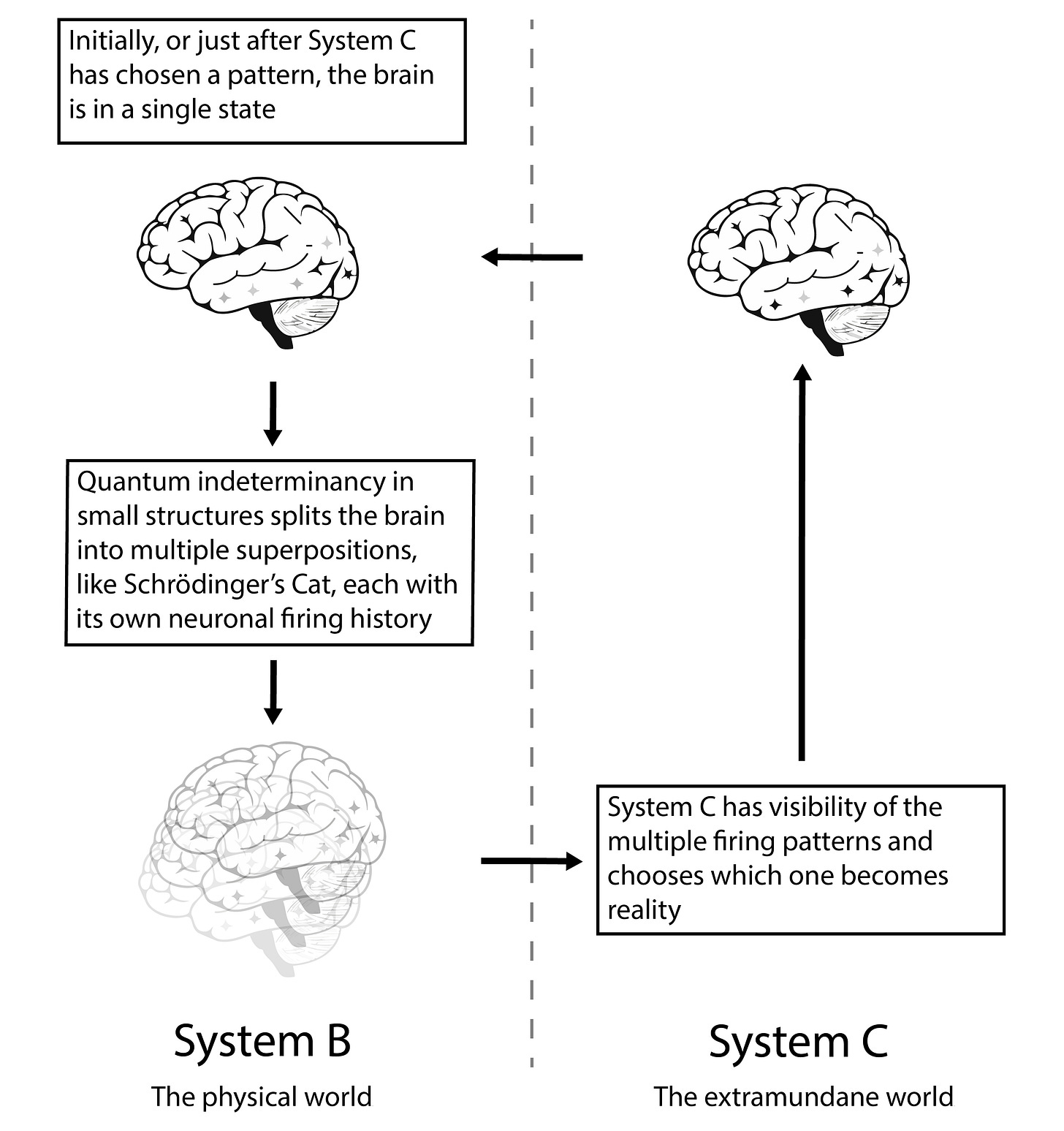

System B is the physical human brain. It obeys the laws of physics and the rules of chemistry. It does everything we know from biology, but there’s nothing special about it.

System C is a separate thing that ‘does’ consciousness. We know very little about it, except what it’s like to be inside. It’s not necessarily part of the physical world or subject to physical laws, and it doesn’t have to be describable by mathematics. It can be extremely complex and be supported by the same order of capability as whatever runs the fabric of the physical universe. For this scenario, we place no constraints on it at all, except on how it can interact with the physical world and what it’s like to be inside.

The brain uses the freedom offered to it by quantum mechanics to evolve in superposed states. These are not coherent states (the brain is too warm for that), but states similar to that of Schrödinger's Cat. One pattern of neuronal firing parallels the cat being alive and another the cat being dead, with the role played by radioactive decay in Schrödinger's thought experiment filled by neuronal structures small enough to exhibit quantum effects.

System C chooses which of the firing patterns goes forward into reality, and it makes that choice after the patterns have evolved in parallel for a short time. It plays the part of both the observer of Schrödinger's Cat, fixing the cat's alive or dead state into reality, and the one who decides whether the cat was alive or dead before the box was opened.

This is not a quantum theory of consciousness but a flavour of Interactive Dualism. Quoting from “A landscape of consciousness: Toward a taxonomy of explanations and implications”:

Advocates of Interactive Dualism […] say they have resources. They reject the weak dualism of Epiphenomenalism where the physical affects the mental but the mental does not affect the physical […]. They can claim that the interaction problem is founded on archaic 19th century, billiard-ball physics, where causation requires hard substances to be in physical contact, to touch one another, as it were. Quantum mechanics, on the other hand, allows for various, albeit speculative ways, for the mental to affect the physical, even beyond the classic but controversial view that an “observer” is needed to “collapse” the wave function. Moreover, because quantum mechanics introduces fundamental uncertainty into the universe, and if […] this indeterminism holds, nonphysical substances might enjoy “wiggle room” to effect causation.

Here we’re simply proposing a mechanism of influence that doesn’t break the laws of physics, assumes no limits on capability or complexity, and sets aside theological and other interpretations or preconceptions. It’s an interesting hypothesis, but is it testable? I think so.

The Big Experiment

The Big Experiment reuses the simulation method used to study the beginning of the universe. This method chooses a set of rules, runs a simulation from an early time shortly after the Big Bang to the present day, and compares the result with the current universe. We look for the same distribution of matter, large-scale structures, ratios of particle types, background radiation, and other secondary signs of early events and mechanics, but ignore exact detail and layout. If it doesn’t match, we tweak our rules and parameters and try again. In this way, we can check whether our ideas are consistent with reality without needing to run direct experiments.
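The “tweak and try again” loop can be sketched in its simplest form as rejection sampling, the idea behind approximate Bayesian computation. This is a swapped-in generic technique, not a description of any particular cosmology code: the one-parameter `simulate` “universe”, the observed ratio and the tolerance are all made up, and the comparison deliberately uses only a summary statistic rather than the exact layout.

```python
import random
import statistics


def simulate(p: float, n: int, rng: random.Random) -> list[int]:
    """Hypothetical toy 'universe': n particles, each of type 1 with probability p."""
    return [1 if rng.random() < p else 0 for _ in range(n)]


def summary(particles: list[int]) -> float:
    """Compare only a coarse statistic (the ratio of particle types), not the exact layout."""
    return sum(particles) / len(particles)


def fit(observed: float, tries: int = 2000, tol: float = 0.02, n: int = 200, seed: int = 0) -> list[float]:
    """Rejection sampling: try many rule settings, keep those whose output matches."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(tries):
        p = rng.random()                     # pick a candidate rule setting
        simulated = summary(simulate(p, n, rng))
        if abs(simulated - observed) < tol:  # does it resemble the observed universe?
            accepted.append(p)
    return accepted


kept = fit(observed=0.25)
print(len(kept), "settings kept; mean p =", round(statistics.mean(kept), 3))
```

The settings that survive cluster around the value that reproduces the observation, which is the sense in which a simulation can test ideas without a direct experiment.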

The Big Experiment runs the same simulation over human history. We start with primitive brains, System Bs, that know only the mechanics of the world but are capable enough to survive as animals. We add System Cs to the simulation. These contain the known aspects of the human condition and influence which of their B’s firing patterns become real. The aim is to get the end state of the simulation to resemble a modern human being, with an evolution over time that roughly matches known history.

This is a big ask, but we should be able to see some characteristics without a full simulation. For example, once language appears, concepts should propagate differently. Instead of waiting for each System B to stumble on unknown concepts via guided exploration, Bs can pick them up from language once a single System B has found them. System Cs can then use them as if given a new tool in their toolbox.
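A crude population model shows the kind of signature to look for. Every part of it is a hypothetical assumption: each B that lacks a concept discovers it with probability `p_discover` per generation, and, when `language` is on, each B that knows the concept at the start of the teaching phase passes it to one randomly chosen B per generation.

```python
import random


def generations_until_all_know(n_agents: int, p_discover: float, language: bool,
                               seed: int = 0, max_gens: int = 100_000) -> int:
    """Generations until every B holds the concept (capped at max_gens)."""
    rng = random.Random(seed)
    knows = [False] * n_agents
    for gen in range(1, max_gens + 1):
        # Guided exploration: each B that lacks the concept may stumble on it.
        for i in range(n_agents):
            if not knows[i] and rng.random() < p_discover:
                knows[i] = True
        # Hypothetical language effect: each current knower tells one random B.
        if language:
            for _ in range(sum(knows)):
                knows[rng.randrange(n_agents)] = True
        if all(knows):
            return gen
    return max_gens


for lang in (False, True):
    label = "with language" if lang else "without language"
    print(label, generations_until_all_know(100, 0.01, lang))
```

Without language, the last B has to make its own discovery, so the wait is set by the unluckiest individual; with language, it is set by the luckiest one plus a short spreading phase, which takes far fewer generations.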

The Future

This is a broad sketch, to say the least. It also replaces unknowns with more unknowns, raising questions about the nature of this extramundane world. So there is work to be done. I’ll add more articles as time goes on, in the areas of:

Falsification. Is there part of this picture, especially in the physics, that means it doesn’t work?

Evidence. Are there strange things around, say, the evolution of language that support this picture?

Implications. What this means for AI consciousness, life after death, etc.

Why Extramundane AI?

It didn’t have any hits in Google, the domain was available and the name seems to fit. I don’t have any claims on the term though, so feel free to use it however you like.